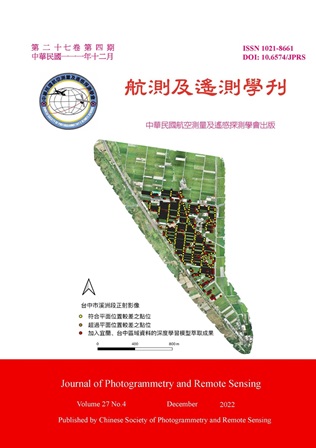

Currently, terrain detail data are mostly surveyed with theodolites and satellite positioning instruments, which is time-consuming and labor-intensive, and the survey results usually cover only local areas. UAVs are increasingly used as low-cost, efficient platforms for acquiring high-resolution data. This study therefore uses ResU-net to assist in extracting global terrain detail information from high-resolution UAV orthoimages of farmland readjustment areas, and evaluates the feasibility of using the post-processed results in detail surveys. In addition to the high-resolution orthoimages, a digital surface model (DSM) generated by dense matching was also used. The results showed that when the label data covered elevation changes, adding the DSM from dense matching improved detection accuracy. The F-score was 0.73 on the Yilan test data and 0.86 on the Taichung test data. In terms of planar position difference, about 80% of the data met the accuracy requirement, demonstrating the feasibility of using deep learning to assist in extracting global terrain detail information for farmland readjustment areas.
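The two evaluation measures named above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: it assumes the F-score is the usual pixel-wise F1 (the harmonic mean of precision and recall) over binary detection masks, and that the planar position difference is the Euclidean offset between matched extracted and surveyed points. The function names `f_score` and `pass_rate` and the `tolerance_m` threshold are hypothetical; the abstract does not state the tolerance used.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def f_score(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Pixel-wise F1 for binary masks flattened to sequences of 1/0 (assumed definition)."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)      # detected and labeled
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)  # detected, not labeled
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)  # labeled, missed
    if tp == 0:
        return 0.0  # avoids division by zero when nothing is correctly detected
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def pass_rate(extracted: Sequence[Point], surveyed: Sequence[Point],
              tolerance_m: float) -> float:
    """Fraction of matched point pairs whose planar offset is within tolerance_m.

    tolerance_m is a hypothetical threshold; the abstract does not give its value.
    """
    if len(extracted) != len(surveyed) or not extracted:
        raise ValueError("need equal-length, non-empty lists of matched points")
    within = sum(
        1
        for (xe, ye), (xs, ys) in zip(extracted, surveyed)
        if math.hypot(xe - xs, ye - ys) <= tolerance_m
    )
    return within / len(extracted)


if __name__ == "__main__":
    # Toy data only, not values from the study.
    print(f_score([1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0]))
    print(pass_rate([(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0)],
                    [(0.1, 0.0), (1.0, 1.2), (2.5, 2.0), (3.0, 3.0)],
                    tolerance_m=0.3))
```

On the toy masks, precision and recall are both 2/3, so the F-score is 2/3; on the toy points, three of the four offsets fall within 0.3 m, giving a pass rate of 0.75.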